- Definition: System Design is the process of designing the architecture, components, and interfaces for a system so that it meets the end-user requirements.

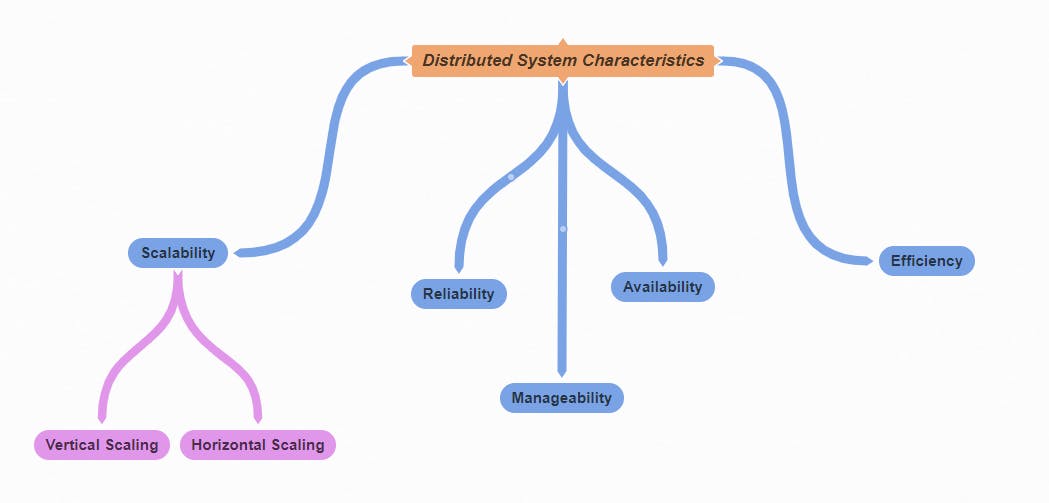

Characteristics of systems:

Scalability- Scalability is the ability of a system to handle increased demand by adding resources. A system can be scaled in two ways:

Horizontal Scaling- In horizontal scaling, we add more servers of the same configuration and distribute the load across them.

Vertical Scaling- In vertical scaling, we add higher-capacity components to the same machine/server to increase the load it can handle.
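As a rough illustration of horizontal scaling, the sketch below spreads a fixed number of requests over a pool of identical servers in round-robin order. The `distribute` function and the server names are hypothetical, and round-robin is only one of several possible ways to distribute load.

```python
from collections import Counter
from itertools import cycle


def distribute(request_count, servers):
    """Assign requests to servers in round-robin order and count the load per server."""
    load = Counter()
    pool = cycle(servers)
    for _ in range(request_count):
        load[next(pool)] += 1
    return dict(load)


# Doubling the number of identical servers halves the load on each one.
two_servers = distribute(1200, ["server-1", "server-2"])
four_servers = distribute(1200, ["server-1", "server-2", "server-3", "server-4"])
```

With two servers each one handles 600 requests; with four, each handles 300.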

Reliability- A system is said to be reliable if it keeps delivering its services even when a failure occurs. To keep the system reliable, we can replace a failing machine with a new healthy one.

Availability- Availability is the fraction of time a system remains operational and able to perform its function over a specific period. A system that is available is not necessarily reliable.
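Availability is usually expressed as a fraction or percentage of a period. A minimal sketch of the arithmetic, assuming a one-year period of 8760 hours; the function names are illustrative only:

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def availability(uptime_hours, downtime_hours):
    """Fraction of the observed period during which the system was operational."""
    return uptime_hours / (uptime_hours + downtime_hours)


def downtime_budget_hours(target_availability, period_hours=HOURS_PER_YEAR):
    """Maximum downtime allowed in the period for a target availability."""
    return (1 - target_availability) * period_hours


observed = availability(uptime_hours=8751.24, downtime_hours=8.76)  # about 0.999
budget = downtime_budget_hours(0.999)  # about 8.76 hours per year
```

In other words, a 99.9% availability target leaves roughly 8.76 hours of downtime per year.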

Efficiency- Efficiency can be measured by two parameters - i) Latency- The delay between an action and the corresponding result/response. ii) Bandwidth- The maximum amount of data that can be transferred within a given period of time.
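To see how the two parameters interact, the sketch below uses a deliberately simplified model in which the time to receive a response is the latency plus the payload size divided by the bandwidth. The model and the numbers are assumptions for illustration; real networks add protocol overhead and congestion effects.

```python
def transfer_time_seconds(size_bytes, latency_seconds, bandwidth_bytes_per_second):
    """Simplified model: a fixed delay plus the time needed to push the payload through."""
    return latency_seconds + size_bytes / bandwidth_bytes_per_second


# 50 ms latency on a link that carries 100 MB per second.
small = transfer_time_seconds(1_000, 0.05, 100_000_000)       # dominated by latency
large = transfer_time_seconds(10_000_000, 0.05, 100_000_000)  # dominated by bandwidth
```

Small responses are limited mostly by latency, while large transfers are limited mostly by bandwidth.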

Serviceability / Manageability- Serviceability is the speed with which the system can be repaired. If the time taken to repair the system increases, its availability decreases.
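The link between repair time and availability can be made concrete with the commonly used steady-state formula availability = MTBF / (MTBF + MTTR), where MTBF is the mean time between failures and MTTR is the mean time to repair. These terms and the numbers below are not from the text above; they are assumptions used for illustration.

```python
def steady_state_availability(mtbf_hours, mttr_hours):
    """Availability as uptime between failures over uptime plus repair time."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


# Same failure rate, different repair times.
fast_repair = steady_state_availability(mtbf_hours=1000, mttr_hours=1)
slow_repair = steady_state_availability(mtbf_hours=1000, mttr_hours=10)
```

With the same failure rate, the system that takes ten hours to repair ends up less available than the one repaired in an hour.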

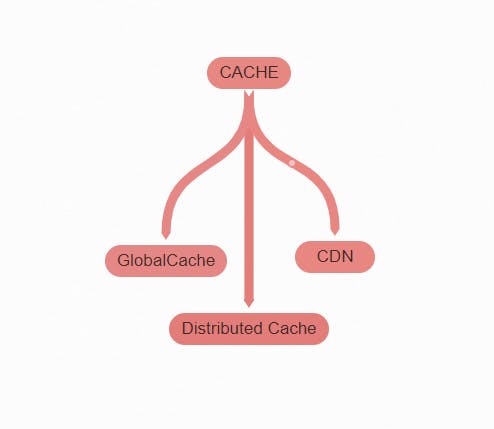

- Cache - A cache is short-term memory that is typically faster than the original data source. If some data is needed frequently, we can store it in the cache and fetch it from there, which takes less time than fetching it from the original data source.

Why use a cache - i) To reduce network calls. ii) To reduce the load on the database.
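A minimal sketch of the read path, assuming an in-memory dictionary as the cache and a hypothetical `Database` class that counts how often it is queried:

```python
class Database:
    """Stand-in for a slow data source; counts how often it is queried."""

    def __init__(self, rows):
        self.rows = dict(rows)
        self.reads = 0

    def get(self, key):
        self.reads += 1
        return self.rows.get(key)


class CachedReader:
    """Serves reads from the cache when possible and falls back to the database."""

    def __init__(self, database):
        self.database = database
        self.cache = {}

    def get(self, key):
        if key in self.cache:
            return self.cache[key]       # cache hit: no database call
        value = self.database.get(key)   # cache miss: go to the data source
        self.cache[key] = value          # keep it for later reads
        return value


db = Database({"user:1": "Alice"})
reader = CachedReader(db)
first = reader.get("user:1")   # miss, reads the database
second = reader.get("user:1")  # hit, served from the cache
```

Both calls return the same value, but the database is queried only once.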

Types of Cache-

Cache Writing Strategies-

Write-Through Cache- In this type, data is written to the cache as well as to the database. The risk of data loss is low, but every write must complete in both places, which results in higher latency for write operations.
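A minimal write-through sketch, assuming two in-memory dictionaries stand in for the cache and the database (the class and attribute names are hypothetical):

```python
class WriteThroughCache:
    def __init__(self):
        self.cache = {}
        self.database = {}  # hypothetical stand-in for persistent storage

    def write(self, key, value):
        # The write completes only after both stores have been updated.
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


store = WriteThroughCache()
store.write("price", 42)
```

After the write, the value is present in both the cache and the database, so a read immediately after a write is a cache hit.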

Write-Around Cache- In this type, data is written directly to the storage, bypassing the cache. This method is useful when recently written data is not read frequently. The drawback of this strategy is that reading recently written data results in a "cache miss", so it has to be fetched from the slower storage.
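A minimal write-around sketch with in-memory dictionaries standing in for the cache and the storage. Dropping any previously cached copy on write is an assumption added here to avoid serving stale data; the class and attribute names are hypothetical.

```python
class WriteAroundCache:
    def __init__(self):
        self.cache = {}
        self.database = {}  # hypothetical stand-in for persistent storage
        self.misses = 0

    def write(self, key, value):
        self.database[key] = value  # the write bypasses the cache
        self.cache.pop(key, None)   # assumption: discard any stale cached copy

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        self.misses += 1            # recently written data is not cached yet
        value = self.database.get(key)
        self.cache[key] = value
        return value


store = WriteAroundCache()
store.write("price", 42)
first_read = store.read("price")   # cache miss, loaded from storage
second_read = store.read("price")  # cache hit
```

The first read after the write is a miss; only subsequent reads benefit from the cache.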

Write-Back Cache- In this type, data is written to the cache alone and confirmation is given to the client immediately. The write to storage is done after a specific interval of time. This results in low latency for write-intensive applications. However, this speed comes with the risk of data loss if the system crashes before the cached data has been written to storage.
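A minimal write-back sketch, assuming in-memory dictionaries for the cache and the storage and an explicit `flush()` call in place of a timer (all names are hypothetical):

```python
class WriteBackCache:
    def __init__(self):
        self.cache = {}
        self.database = {}  # hypothetical stand-in for persistent storage
        self.dirty = set()  # keys written to the cache but not yet persisted

    def write(self, key, value):
        # Only the cache is updated; the caller gets confirmation right away.
        self.cache[key] = value
        self.dirty.add(key)

    def flush(self):
        # In a real system this would run periodically or on eviction.
        for key in self.dirty:
            self.database[key] = self.cache[key]
        self.dirty.clear()


store = WriteBackCache()
store.write("price", 42)
persisted_before_flush = "price" in store.database  # False: a crash here loses the write
store.flush()
persisted_after_flush = "price" in store.database   # True
```

Anything still in `dirty` when the process crashes is lost, which is the data-loss risk described above.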

Cache Invalidation- If data in the database is modified, the corresponding entry in the cache must be invalidated as well; otherwise the application may serve stale data and behave inconsistently. We can use the writing strategies discussed above to write new data to the cache. In addition, the following strategies can be used:

i) TTL (Time To Live) - In this, the data in the cache is invalidated after a specific time.

ii) Meta Data Info- In this, meta info about each record is stored in the database as well as in the cache. The meta info in the database is kept up to date whenever the record is modified. Whenever we access a record from the cache, we compare the meta info in the cache with the meta info in the database. If they are the same, we serve the data from the cache; if they differ, we replace the cached record with the record from the database.
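Both invalidation strategies can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: the clock is injectable so expiry can be shown without waiting, the meta info is modeled as a simple version counter, and all class names are hypothetical.

```python
import time


class TTLCache:
    """TTL: an entry is treated as invalid once its time to live has elapsed."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.entries = {}  # key -> (value, expiry time)

    def set(self, key, value):
        self.entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key):
        entry = self.entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.entries[key]  # expired: invalidate the entry
            return None
        return value


class VersionedDatabase:
    """Stand-in database that keeps a version number as meta info for each record."""

    def __init__(self):
        self.records = {}  # key -> (version, value)
        self.full_reads = 0

    def write(self, key, value):
        version, _ = self.records.get(key, (0, None))
        self.records[key] = (version + 1, value)

    def version(self, key):
        return self.records[key][0]

    def read(self, key):
        self.full_reads += 1
        return self.records[key]


class VersionCheckedCache:
    """Meta info check: serve from the cache only if the versions match."""

    def __init__(self, database):
        self.database = database
        self.cache = {}  # key -> (version, value)

    def get(self, key):
        cached = self.cache.get(key)
        if cached is not None and cached[0] == self.database.version(key):
            return cached[1]
        self.cache[key] = self.database.read(key)  # stale or missing: reload
        return self.cache[key][1]


now = [0.0]  # fake clock so the example is deterministic
ttl_cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
ttl_cache.set("session", "abc")
now[0] = 29.0
fresh = ttl_cache.get("session")    # still valid
now[0] = 30.0
expired = ttl_cache.get("session")  # invalidated after 30 seconds

db = VersionedDatabase()
db.write("user:1", "Alice")
checked = VersionCheckedCache(db)
before = checked.get("user:1")
db.write("user:1", "Alicia")        # bumps the version in the database
after = checked.get("user:1")       # version mismatch, cache is refreshed
```

The version check still costs a lookup in the database on every read, so it pays off mainly when the meta info is much cheaper to fetch than the full record.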